I set out to finally prove something that I’ve been saying for a long time: X264 Slow is not as magical as people say it is, and it’s definitely not worth the performance tradeoff for 99% of streamers. Oh, and AMD’s streaming benchmark at E3 2019 was a really terrible showing. I think I did a good job here and, after many more hours than I’d prefer to admit, I’m ready to show you why.

Be sure to check out this video’s sponsor: Tubebuddy.

What I hope to clear up here is that the problem is not “Slow is too intense so no one uses it, thus it’s a bad benchmark” but rather that “Slow literally should not be used for this purpose.”

This is a follow-up to Gamers Nexus’s video: Explaining AMD’s Misleading Marketing: X264 Stream Quality & Benchmarks.

[Sorry for taking so long to get this published. This video has been my obsession for the past 72-96 hours, non-stop. It takes a lot of time for things to process for these tests, I had to scrap my original test runs, then it took about 7 hours to edit the final video (plus scripting the video, ~45 minute raw a-roll take), and then about 5-6 hours of fighting render crashes/out-of-VRAM errors in Resolve (I really need a Quadro RTX 5000 or higher or a Titan RTX…) before I could even start uploading it to YouTube to process. I really wanted this out quicker, but there was just nothing I could do.]

There’s a lot that went into this particular project that was too much to cram into this video (crazy to think, given its length) so I have ALL the nerdy details for this post, so I hope you’re ready.

MISLEADING VS LYING

(Timecode 02:59)

Something I wanted to address was the fact that I agree with GN Steve that AMD’s streaming benchmark was a tad misleading. Some seem to be really stuck on the idea that since AMD didn’t outright lie in the presentation, and disclosed some of the settings used, it somehow can’t be misleading, which just isn’t true.

And there are some who feel that since Intel has done some weird misleading marketing in the past that AMD should somehow be free from criticism here? Even though GamersNexus was far more harsh on Intel’s marketing mishaps and for a much longer amount of time. So no.

Yes, the nerdiest audience members out there, who knew 100% what everything meant and could read between the lines, could discern the specific situation AMD’s streaming benchmark applied to and know that it doesn’t realistically apply to all streaming situations. But for a general press conference where information will be disseminated down the grapevine with nuance lost along the way, the implication given by AMD’s showing was that the 9900K is not capable of game streaming. Directly, that’s a problem.

However, even for the people out there who think they “know better”, there are some that will now normalize X264 slow as the default or the target setting that you should stream at, and thus hardware that can’t do so is not the right choice, which ends in virtually the same conclusion.

I’ve seen this time and time again. People get these silly misconceptions based on marketing fluff or outdated ideas (see: Elgato vs AVerMedia in the public perception of those who haven’t used it) and it gets messy. These very kinds of things are why I avoid diving into forums and r/Twitch, etc. to help people out – even though it would be great for my brand – because there’s just too much misinformation that I can’t fight other than by making content and hoping the right info gets put out there.

Virtually every streamer I’ve seen brag about pushing Slow for their stream was switching from something like VeryFast, so the quality jump seemed more significant, and they usually wind up backing it off to Medium once they encounter a more difficult-to-encode game.

Slow is not a realistic setting for this. It’s meant for post-production final video mastering, not live game streaming, and when used live it virtually always requires fine-tuning on any machine, even something as beefy as my 18 core i9. Leaving it to run free and utilize all of your threads is a foolish decision which will always net you worse results. Many of Slow’s options are wasteful for live environments, too.

Also working against AMD is that they oddly chose 10mbps for their bitrate, at which point my testing shows that even VeryFast is of similar quality to Slow, so using Slow was still a very poor benchmark choice.

Plus the entire part of that event left out that their GPUs were at almost full utilization, which leads to GPU-based render and compositing issues, as OBS needs GPU overhead to operate, dropping more frames from that alone, which also causes more encoding problems. And when you consider that the AMD CPU side still dropped nearly 3% of frames with GPU utilization that high, the actual player experience would be jittery or stuttery as all hell and just a poor experience. This… was just not a good benchmark, period. Despite the fact that their CPU would clearly obliterate the 9900K in single-PC streaming scenarios.

METHODOLOGY

(Timecode 06:34)

A common complaint of GN’s stream testing is that they don’t use fast-paced games like shooters, which stress the encoder much more, especially at lower resolutions. This is actually for a reason, as their testing was performance-based. Their goals involved discussing single-PC streaming setups where performance is tested for streaming while gaming, where you cannot replicate the exact same scene in a live gameplay of something like Apex Legends or PUBG for multiple benchmark runs.

That’s part of the reason I had reached out to give input to GN for this video, hoping they would do one. I don’t have the interest nor patience for performance benchmarking and they already had the setup and capacity for such testing, so they were perfect to take charge on that side.

My testing, however, is 100% replicable using the same source file for all of my encode passes. I had to scrap the original process I had been working on for a couple months, which involved recording the file playback in OBS to compare, as there will always be occasional dropped or missed frames (even if not reported by OBS) that will send the files out of sync, and the start/end times will never match up, making scientific comparison impossible.

So for this project I started over with a 1440p Apex Legends gameplay recording (I haven’t played in a month and have since changed my mouse DPI settings, so I’m rusty, okay?) recorded completely losslessly in UtVideo with no dropped frames in OBS to a NVMe drive. This file is completely overkill at about 2.3 gigabits per second, but it gives us a perfect source master. I then transcoded the file using FFMPEG to a lossless ProRes file scaled to 1080p, since that’s what our OBS tests are at and comparisons need to be of the same resolution.

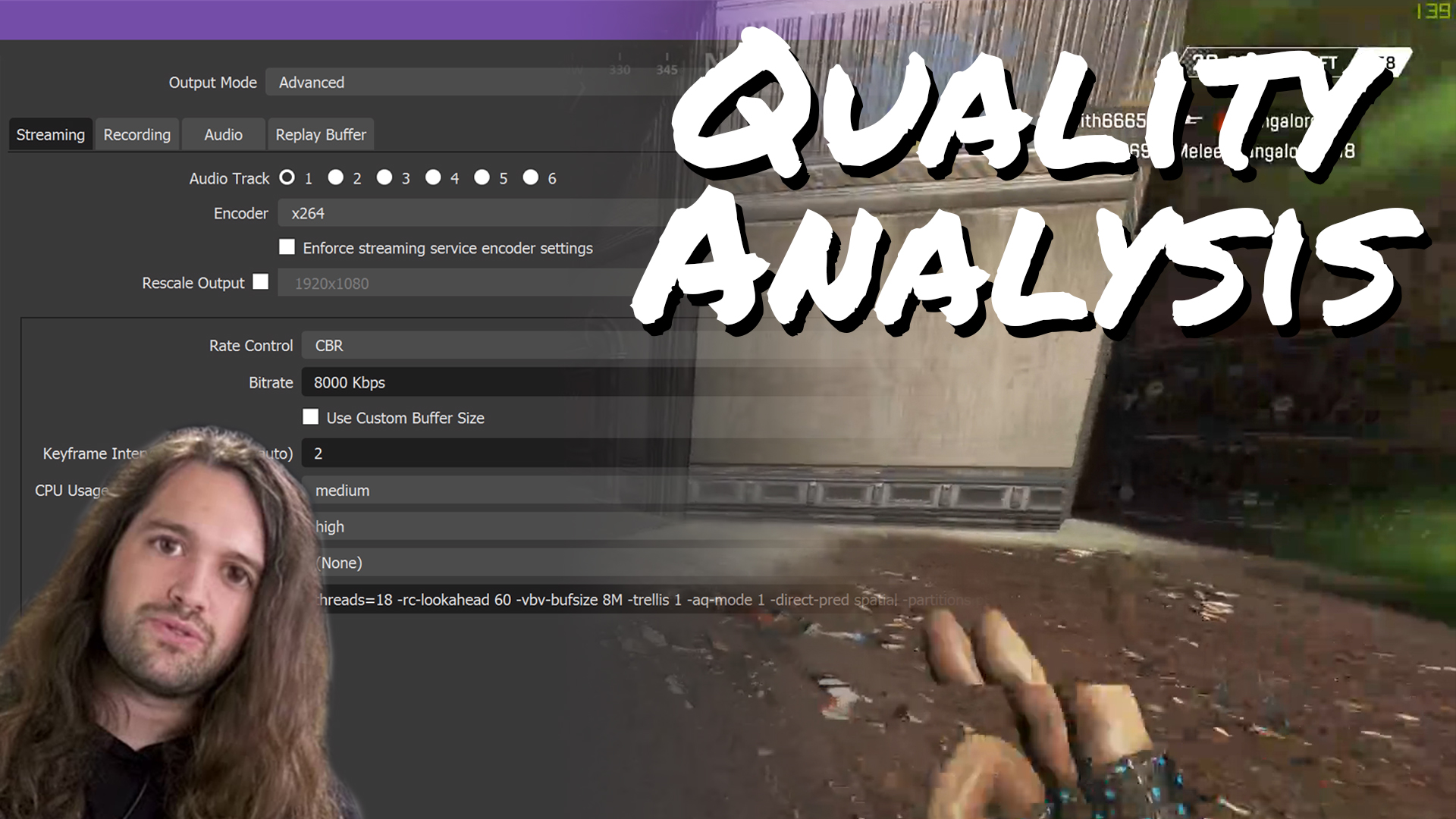

Then I used FFMPEG with some automated scripting to create transcodes of the file in the different CPU usage presets, and in NVENC on my RTX 2080 in my gaming rig, at various bitrates for comparison. I also ran some encodes using my “secret sauce” X264 settings that I use for my OBS Studio streams to see how much they actually improve over the raw presets.
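A batch like this can be generated with a few lines of scripting. The Python sketch below is only an illustration and not the actual scripts from the Github repo: the preset list, the bitrate list, the output naming scheme, and the choice to set -bufsize equal to the bitrate are all assumptions. It only builds the FFMPEG argument lists; each list could then be handed to subprocess.run.

```python
from itertools import product

# Assumed test matrix: presets and bitrates discussed in this post.
PRESETS = ["veryfast", "fast", "medium", "slow"]
BITRATES = ["2.5M", "6M", "10M"]


def x264_encode_cmd(source, preset, bitrate):
    """Build one ffmpeg/libx264 command as an argument list."""
    out = f"x264_{preset}_{bitrate}.mp4"  # hypothetical naming scheme
    return [
        "ffmpeg", "-y", "-i", source,
        "-c:v", "libx264", "-preset", preset,
        "-pix_fmt", "yuv420p",
        # Constrain the rate so the encode behaves roughly like a live stream.
        "-b:v", bitrate, "-maxrate", bitrate, "-bufsize", bitrate,
        out,
    ]


def encode_matrix(source):
    """One command per (preset, bitrate) combination."""
    return [x264_encode_cmd(source, p, b) for p, b in product(PRESETS, BITRATES)]


if __name__ == "__main__":
    for cmd in encode_matrix("source_1080p.mov"):  # hypothetical file name
        print(" ".join(cmd))
```

Keeping the commands as lists rather than one long string avoids quoting problems when file names contain spaces.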

Next, I repeated all of these encodings, but with a direct ProRes recording at 1080p of a PS4 Pro game, XMorph Defense, to rule out 1440p to 1080p scaling as a cause of issues. That actually ended up being harder to encode than Apex Legends, funny enough.

Then I used FFMPEG’s PSNR and SSIM comparison tools to generate data about how the files compared to the original, charted in a spreadsheet. I used this data, as-is, for comparison – but in the future I’d like to feed the PSNR results into a Bjontegaard Delta PSNR (BD-PSNR) analysis formula to analyze the bitrate differences and bitrate efficiency differences between everything.
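For reference, FFMPEG exposes these two metrics as the psnr and ssim filters, which take the encoded file and the reference as two inputs. The sketch below shows one way such a comparison command could be built in Python. The function name and the log file naming are hypothetical, and it assumes plain file names, since colons or backslashes inside a filter argument would need escaping.

```python
def metric_cmd(distorted, reference, metric):
    """Build an ffmpeg command comparing `distorted` against `reference`.

    `metric` must be "psnr" or "ssim", the names of the two ffmpeg filters.
    """
    if metric not in ("psnr", "ssim"):
        raise ValueError(f"unsupported metric: {metric}")
    log = f"{distorted}.{metric}.log"  # hypothetical per-frame stats file
    return [
        "ffmpeg", "-i", distorted, "-i", reference,
        "-lavfi", f"{metric}=stats_file={log}",
        # Decode and compare only; no output file is written.
        "-f", "null", "-",
    ]


if __name__ == "__main__":
    print(" ".join(metric_cmd("x264_fast_6M.mp4", "source_1080p.mov", "psnr")))
```

Both files need the same resolution and frame count for the numbers to mean anything, which is why the source was scaled to 1080p first.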

(Microsoft Office’s installer refuses to run on my production PC and while I got it running on my gaming PC after about 2 hours of troubleshooting, I then ran into issues getting the Bjontegaard spreadsheet working properly and decided the time cost was already too high and not worth pursuing for now.)

The problem is, PSNR and SSIM, while being great analysis tools, represent a machine’s interpretation of artifacting, quality, and bitrate usage and can provide some surprising results which aren’t in line with a human’s perceptual quality comparisons.

So to tackle this, I looked into Netflix’s “VMAF” tools. VMAF stands for “Video Multi-Method Assessment Fusion” and aims to accurately analyze video image quality based on how the human eye would perceive it, to best allocate bandwidth-per-quality for Netflix’s streaming service. This is available open source here and I used this guide to get it running on Windows in minimal time. (Meanwhile this guide to manually compile/build VMAF on Linux kept netting me many errors.)

The VMAF process will actually let you calculate PSNR, SSIM, and VMAF all at one time, but I didn’t learn that until I had already done all of my PSNR/SSIM analyses (via FFMPEG).

It’s worth noting that later in the video/post you will see some VMAF numbers that place some “SECRET SAUCE” files significantly higher than the other files in those graphs. While the files were better quality, this is due to the “SECRET SAUCE” files being 720p versus the 1080p files they were compared to – VMAF factors resolution into its quality numbers, as it considers screen size and viewing distance an important factor in perceived image quality (as it should). (I explain what “SECRET SAUCE” means in the video/later on.)

You can find the scripts I used for this process on my Github. I’ve explained what each script does. They are scattered because I did different test runs and my process evolved as I went. I started automating batch jobs of encodes, conversions, analyses, etc. – but the whole process could actually be automated from start to finish if you knew what you wanted to do from the beginning. The only major hiccup I had was with saving/retrieving the analysis numbers. I simply had to scroll up and match the numbers to the corresponding files for each run. This is not ideal, but wasn’t worth the time investment to learn how to solve this in the moment.
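One possible way around the scroll-and-match problem is to capture FFMPEG’s console output per file and pull the summary numbers out with a regular expression. This is a sketch only: it assumes the summary lines look like the two sample lines in the code, and the sample values are made up for illustration, not measurements from these tests.

```python
import re

# Summary lines as printed by ffmpeg's psnr and ssim filters (assumed format).
PSNR_RE = re.compile(
    r"PSNR .*?average:([0-9.]+|inf) min:([0-9.]+|inf) max:([0-9.]+|inf)"
)
SSIM_RE = re.compile(r"SSIM .*?All:([0-9.]+)")


def parse_summary(log_text):
    """Extract PSNR average/min/max and overall SSIM from ffmpeg output."""
    result = {}
    m = PSNR_RE.search(log_text)
    if m:
        avg, lo, hi = (float(g) for g in m.groups())
        result.update(psnr_avg=avg, psnr_min=lo, psnr_max=hi)
    m = SSIM_RE.search(log_text)
    if m:
        result["ssim_all"] = float(m.group(1))
    return result


if __name__ == "__main__":
    # Illustrative lines only; the numbers are invented.
    sample = (
        "[Parsed_psnr_0 @ 0x1] PSNR y:36.50 u:42.00 v:43.00 "
        "average:37.80 min:30.10 max:48.90\n"
        "[Parsed_ssim_0 @ 0x2] SSIM Y:0.97 (15.2) U:0.98 (17.0) "
        "V:0.98 (17.1) All:0.975 (16.0)\n"
    )
    print(parse_summary(sample))
```

The text to parse would come from something like subprocess.run(cmd, capture_output=True, text=True).stderr, and the resulting dictionaries could be written out with csv.DictWriter, one row per encoded file.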

You can find my spreadsheet with all of my raw data here.

As mentioned, download links to all the sample clips and a clean H265 encoding of this video will be made available to Patreon, DonorBox, and Ko-Fi contributors, as well as Twitch subs and YouTube channel members. Signup Links:

- Patreon – https://patreon.com/eposvox

- Ko-Fi – https://Ko-fi.com/eposvox

- DonorBox – https://donorbox.org/eposvox-monthly-membership

- Channel Membership Signup – https://geni.us/v6PX

- Paypal Direct – https://paypal.me/eposvox (mention in email that you want a link to the files)

- Join us on Discord – https://eposvox.com/discord

Side note: There are GUI-based applications designed to present image quality analysis, even using VMAF, that provide real-time graphs and overlays and etc. to help with visuals – but licenses for them are very expensive. Three I know of are VQMT, Zond 265, and AccepTV’s Video Quality Analyzer. I can’t directly speak to how well they may or may not work, but I hear good things about VQMT, at least. I couldn’t obtain a license for this video, but if anyone wants to sponsor one for our work, I can include it in future experiments.

COMPARISONS

(Timecode 08:36)

NOTE: My website heavily compresses the screenshots here. I have uploaded uncompressed PNG frames to imgur for viewing, but these are still scaled to the video canvas and intended for samples/supplement references to the video, only.

Throughout the video, I provide many visual comparison points. I will summarize some thoughts here with some screenshots, but I’m relying on video-watching here.

6 megabits per second is the highest bitrate officially supported by Twitch. While you can technically push 8 with no major reports of punishment so far, that’s supposedly meant to be reserved for some partners and is not officially listed as supported anywhere. Plus, it’s a more realistic goal. So let’s start with that.

At 6 megabits per second for 1080p, you can easily spot a pretty significant upgrade dropping from VeryFast down to Fast. (Yes, I’m skipping Faster.) VeryFast can get outright blocky in some scenarios and is otherwise pretty blurry all around for in-game textures and details, and sometimes shifts colors a little weirdly. Fast brings a lot of that detail out and you can immediately tell it’s a cleaner image.

Moving from Fast to Medium, however, is a much less significant jump. For far more processing power, you’re only getting a small gain in sharpness to the overall image, and only a small reduction of blocking or pixelation, depending on the scene. It still looks nicer, but the curve of diminishing returns is already rearing its head.

Dropping down to Slow, and… you’d be forgiven for not even noticing I’d changed it at all. In some sections you might see a little added sharpness, but then you also sometimes start to see more blocking than you saw before. It’s not a magical cure-all for quality, by any stretch. In some cases you’re not even getting less blocking, just… different blocking. The optimizations that start to appear in Slow are mostly meant for multi-pass encoding – something not possible during a live stream – or super low bitrate streams. Not helpful here.

Throw in Nvidia’s Turing NVENC encoding, which also has RDO (Rate Distortion Optimization) like Medium and Slow do, and in some scenes you’re absolutely getting less blocking than Medium. You sacrifice a bit of sharpness, but end up with a perceivably cleaner overall image. NVENC is optimized for perceptual quality, versus quantifiable quality measurements like specific contrast sharpness detection, etc.

Pulling up some calculated PSNR, or Peak Signal to Noise Ratio, measurements, you can see similar results here. A high jump between VeryFast and Fast, but very minimal gains moving beyond Fast, especially when you consider how much more processing power – and tinkering of settings because when live you do NOT want to run these settings as they come out of the box, which we’ll cover later – is required to run these slower presets. Of course, as I mentioned, it calculates NVENC as being significantly lower, but this is due to differences in sharpness and where NVENC prefers to spend its bits to benefit perceptual quality.

[You will notice in all PSNR graphs that the average PSNR for NVENC is significantly lower than any of the X264 presets. This is inaccurate, as every NVENC encode I did had some sort of DTS timecode error that caused the file to de-sync from the source/X264 files about halfway to two-thirds of the way through. I could not figure out how to fix this, but this caused the PSNR numbers to drop significantly, as it was no longer comparing matching frames. You can see the max PSNR is still high with the others, and later VMAF will tell a more accurate picture of NVENC’s performance.]
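To see why a de-sync wrecks the average, here is a toy Python sketch of the PSNR formula, 10 * log10(peak^2 / MSE), applied to tiny made-up "frames" of four 8-bit samples each. The frame data is invented purely for illustration. The encoded frames differ from the reference by one brightness level, which scores well when aligned and much worse when compared one frame late.

```python
import math


def psnr(reference, distorted, peak=255):
    """PSNR in dB between two equal-length sequences of 8-bit samples."""
    if len(reference) != len(distorted):
        raise ValueError("frames must have the same number of samples")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames
    return 10 * math.log10(peak ** 2 / mse)


def mean_psnr(ref_frames, enc_frames):
    """Average per-frame PSNR over the frames both sequences share."""
    scores = [psnr(r, e) for r, e in zip(ref_frames, enc_frames)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy clip: brightness ramps up by 10 each frame.
    ref = [[10 * i] * 4 for i in range(6)]
    # Toy "encode": every sample is off by one level.
    enc = [[v + 1 for v in frame] for frame in ref]
    print("aligned:", round(mean_psnr(ref, enc), 2))
    # Same encode, compared against the reference one frame late.
    print("de-synced:", round(mean_psnr(ref[1:], enc), 2))
```

The encode itself is unchanged between the two runs. Only the frame pairing differs, which is the same failure described for the NVENC files.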

Another analysis method, SSIM or Structural Similarity index, more strongly shows the similarities between Slow, Medium, and Fast at this bitrate, as well.

According to SSIM, X264 Fast is 99.57% of the quality of X264 Slow. For PSNR, Fast is 98.92% of the quality of Slow. I can assure you from experience as a streamer, viewer, and educator and coach for other streamers, that the exponentially higher processing power required for Medium, and especially Slow, is not worth the… 1% to half a percent gain in quality. Again, this isn’t fully accurate to perception, but you get my point.

[I used this site to calculate the percentage-of numbers because I’m a lazy garbage human who “majored” in maths/calculus in high school (magnet school) and can’t be bothered to do any math nearly 10 years later.]
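The percentage-of figures are just one score divided by another. A minimal Python sketch of that arithmetic follows; the example scores in it are hypothetical placeholders, not values from the spreadsheet.

```python
def percent_of(value, baseline):
    """Express `value` as a percentage of `baseline`."""
    return value / baseline * 100.0


if __name__ == "__main__":
    # Hypothetical SSIM scores for two presets at the same bitrate.
    fast_ssim, slow_ssim = 0.98, 0.99
    print(f"Fast is {percent_of(fast_ssim, slow_ssim):.2f}% of Slow")
```

Keep in mind that PSNR is measured in decibels, a logarithmic scale, so a ratio of two PSNR values is a rough comparison rather than a true measure of how much better one image looks.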

Using XMorph Defense for these numbers has all of the results even closer together, as this game was surprisingly difficult to encode at a good quality (lots of particles and small details) and further shows that Slow doesn’t magically fix it.

Another criticism of GN’s benchmark was pointing out, as I’ve mentioned, that slower CPU Usage Presets are more beneficial for lower bitrate footage. But… this isn’t as significant as people seem to think. So let’s get a bit extreme with it. Let’s jump down to 2.5mbps. This is the “slow internet live stream” quality.

Now, you can’t get around physics. In my opinion someone who can only stream at 2.5mbps, and especially lower, should never be streaming at 1080p and will get way better results at 480p or so. I’ll cover scaling in a bit.

The jump from VeryFast all the way to Slow is clearly quite significant, but the individual steps from VeryFast to Fast, Fast to Medium, and Medium to Slow are each only minimally different. Blocking is… different, slightly improves at each step, and sharpness starts to improve at each step. Bafflingly enough, here NVENC actually ends up providing a better-looking image than even X264 Slow, by focusing less on sharpness and more on just not being so damn blocky.

The video is so noisy at 2.5mbps that PSNR doesn’t help us much here, showing that these are all still quite close, but further away in quality difference than you’d actually perceive.

Meanwhile SSIM has these different presets being much closer, with Fast being exactly 99% of the quality of Slow at this bitrate. Slow is not worth it.

Medium to Slow? Again, small increase in sharpness, but different, not necessarily less, blocking, and more blocking in shadows as an attempt to increase sharpness. And this is another case where NVENC, while maybe not being quite as sharp in some areas, ends up looking cleaner than even Slow all-around, and sharper in some areas. I can’t make this stuff up, guys. Believe it or not, I’ve not been talking out of my ass for all of my videos on this subject.

Jumping back to the analysis numbers, PSNR puts VERYFast (I switched presets here, as a note) at 96.71% of the quality of Slow, with SSIM putting VeryFast at 98.93% of Slow. That’s very close at 10mbps, making it really not a good flex of what the different usage presets can do at all.

Your average viewer on a PC will barely be able to tell a difference, a mobile viewer won’t even begin to care. And honestly the same applies to the lower bitrates, as well, as I’ve hopefully shown a bit, here.

If you’re playing slower-paced games, then this is even further exaggerated, as some saw fit to complain about GN’s video, though IMO it still showed some differences with those benchmark levels.

Again, if you want to compare the individual files yourself to pixel peep and go frame by frame, the download is available to Patrons, Donorbox and Ko-Fi supporters, YouTube Channel Members, and Twitch subs. Or I guess you could make a one-time Paypal donation and request the link, too, if it’s enough? I don’t know.

VMAF

(Timecode 17:08)

Using a smaller sample size of clips, and a smaller chunk of that source clip – given it would have taken a couple more days and many more terabytes that I don’t have to spend to do the full run of VMAF testing (my servers are ALL FULL – pls budget 14TB drives pls) – let’s see what we can glean here.

With VMAF, I feel you can interpret similar results as before, with NVENC perhaps conveyed a bit more accurately. At 2.5mbps 1080p you can see a significant jump of 5 points from VeryFast to Fast, but then a jump of less than 3 points from Fast to Medium, and then .8 of a point between Medium and Slow, as well. This puts X264 Fast at about 94% of the quality of Slow, for much less performance cost. VMAF places NVENC one point higher than Fast here.

At 6mbps, the jump from VeryFast to NVENC is about 7 points, or almost 8 points from VeryFast to Fast, but then the difference between Fast and Slow is only about one and a half points’ worth of quality difference.

(Note how my “secret sauce” encode at 720p, X264 Slow, 8mbps results in a near perfect VMAF score – I wasn’t kidding about having the best Apex Legends quality on Twitch.)

I also ran an analysis at 720p with some SUPER low bitrate files and things get weird here because this just isn’t enough bitrate. VeryFast actually scored a point HIGHER than Fast at 0.5mbps, but then Fast was 4.5 points higher than VeryFast at 1mbps. Meanwhile NVENC beats out both Fast and VeryFast at both of these bitrates.

Medium and Slow both top the 0.5mbps and 1mbps charts, with less than one point between them on each, netting about 4 points of gain above Fast on both charts. So it can for sure make a difference here, but it’s still a high curve of diminishing returns. Even at 0.5mbps, Fast is 81% of the quality of Slow – though that was an oddball with Fast being worse than VeryFast. At the same bitrate, NVENC achieves almost 89% of the quality of Slow. At 1mbps, NVENC achieves 95% of the quality of Slow with Fast achieving almost 93% of the quality of Slow.

More than just CPU Usage Presets

(Timecode 20:13)

Hopefully by now I’ve made a pretty fair case about X264 Slow, and for some people even Medium, not being “worth it” for the minimal quality gains. Plus, 9 times out of 10, when I see someone bragging on Twitter about managing to stream Slow they pretty much always end up turning it down to Medium soon after once they play a different game and their stream doesn’t hold up.

But the thing is, people’s obsession with X264 CPU Usage Presets is a vast oversimplification of video quality optimization. This is not uncommon when it comes to mass dissemination of super technical details that are… not documented in the most newbie-friendly way. Much of what I’ve learned about X264 and FFMPEG’s various commands and flags and optimizations beyond the obvious has come from deep diving into video nerd forum posts from the early 2000s or early 2010s, or worse, Google Cached copies of old documentation pages, and pestering the OBS creator and devs, and some Nvidia team members. It’s not an easy or super accessible topic to get all of the knowledge for, and there’s certainly plenty of flags and commands and aspects of it that I haven’t bothered to learn because most of what I know is far too technical for what should be required for game streaming. But in my experience, you’re never just setting OBS to Slow and letting it run wild; it always needs tinkering. Hell, even the OBS team and the FFMPEG developers don’t recommend targeting anything slower than Medium for live game streaming.

We’ll cover some actual limitations in a moment, but let’s talk about other possible optimizations that get overlooked.

Chroma Subsampling

If you’re using a capture card input instead of software Game Capture, such as for consoles or from a 2 PC setup, getting a capture card that supports 4:4:4 RGB Chroma Subsampling can help improve image quality when scaling. This is basically the “color resolution” that affects how color detail is conveyed through the feed, and most gaming capture cards run at only 4:2:0 subsampling. Admittedly, your final stream to YouTube or Twitch or what have you will almost always be at 4:2:0, so the gains are minimal, but starting with the higher quality source when scaling or zooming in can help a bit. Most of the cards that support this are much more pricey than the gaming capture cards, but the AVerMedia Live Gamer 4K actually supports this for all modes, and their Live Gamer Ultra supports it at 1080p60. So this is why I use the Elgato 4K60 Pro for my 4K console inputs – I don’t need 444 RGB there – and the Live Gamer 4K for my PC input since I’m scaling from 1440p 120hz. You’ll need to change the decode mode of your card to RGB. Keep in mind all Elgato cards at the time of production do NOT support 4:4:4 and any listed RGB modes in OBS for them are simply emulated back up from the NV12 mode and are not something you should enable. I cover this more in my Capture Card Documentation resource, linked below.

CLARIFICATION: The Elgato CAM LINK and CAM LINK 4K support 4:2:2 YUY2. I forgot about those when I was scripting. I don’t see those as gaming devices. The HD60S/Pro’s YUY2 modes are probably usable, but I’ve been unable to confirm actual 4:2:2 color space. The point was, don’t use their XRGB modes. Use YUY2 or NV12 from Elgato.

Also, make sure you’re using your capture card’s best decode modes in the first place. By default, most cards enable MJPEG in OBS, but this is a higher latency and much lower quality mode, so if you have something like NV12, or even better YUY2 available (other than specific RGB-supporting cards like I mentioned) definitely use those modes instead.
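To put numbers on "color resolution", the Python sketch below counts how many chroma samples each subsampling scheme keeps for a given frame size. The divisors are the standard meaning of the 4:4:4, 4:2:2, and 4:2:0 notations; the function names are just for this example.

```python
# (horizontal, vertical) divisors applied to the two chroma planes.
SCHEMES = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}


def chroma_resolution(width, height, scheme):
    """Resolution of each chroma plane for a frame of width x height."""
    h_div, v_div = SCHEMES[scheme]
    return width // h_div, height // v_div


def samples_per_frame(width, height, scheme):
    """Total samples: one full-resolution luma plane plus two chroma planes."""
    cw, ch = chroma_resolution(width, height, scheme)
    return width * height + 2 * cw * ch


if __name__ == "__main__":
    for scheme in SCHEMES:
        cw, ch = chroma_resolution(1920, 1080, scheme)
        total = samples_per_frame(1920, 1080, scheme)
        print(f"{scheme}: chroma planes {cw}x{ch}, {total} samples per frame")
```

At 1080p, 4:2:0 stores color at only 960x540, so any scaling or zooming done on a 4:2:0 capture starts from a quarter of the color detail that a 4:4:4 capture has.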

[Check out my capture card reviews playlist for more capture card education, and my OBS Master Class to learn everything about OBS. Full OBS playlist with every video I’ve ever made on it.]

Color Space & RGB Range

Also make sure all of your devices and OBS are set to Rec709 color space. You need to match these between OBS and your devices, so pay attention.

What also needs to be matched between devices and OBS is your RGB Range – Full or Limited. Typically Full is used for PC inputs and Limited for consoles, but when in doubt or mixed environments, it’s usually best to go with all Limited. If you have these mis-matched between OBS and your devices, you’ll either get super washed out and ugly colors, or super dark and punchy contrasty colors which will be harder to see and compress.
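The washed-out versus crushed behavior falls out of the range math. For 8-bit video, Limited range puts black at 16 and white at 235, while Full range uses 0 to 255. The Python sketch below uses the usual linear mapping between the two; it is a simplified per-value illustration, not how OBS or any capture driver is actually implemented.

```python
def full_to_limited(v):
    """Compress a full-range (0-255) value into limited range (16-235)."""
    return round(16 + v * 219 / 255)


def limited_to_full(v):
    """Expand a limited-range value to full range, clipping out-of-range input."""
    return max(0, min(255, round((v - 16) * 255 / 219)))


if __name__ == "__main__":
    # Matched settings: black and white land where they should.
    print(limited_to_full(16), limited_to_full(235))
    # Mismatch A: a Limited signal shown as if it were Full. Black stays at
    # 16 and white at 235, so the picture looks gray and washed out.
    print("limited shown as full:", 16, 235)
    # Mismatch B: a Full signal expanded as if it were Limited. Everything
    # at or below 16 clips to 0 and everything at or above 235 clips to 255.
    print("full expanded as limited:", limited_to_full(10), limited_to_full(245))
```

In mismatch B the shadow and highlight detail is clipped away before the encoder ever sees it, so no preset can bring it back.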

Stop Over-Saturating!

Also, I recommend AGAINST adding a contrast or saturation boost filter after the fact. This used to be really common with YouTube videos, but it has two problems. First, you’re making your footage not look like it should: when set up properly as just described, your capture card is capturing the footage exactly as your device puts it out, and software capture is 100% as produced. Second, higher saturation and contrast make your footage harder to encode, and thus it looks worse during your live stream.

Scaling?!

Lastly, let’s talk about the thing that virtually everyone wants to disagree with me on, but I feel my examples here help prove otherwise… scaling. In my opinion, MOST PEOPLE should not be streaming 1080p resolution to Twitch, especially PC players. There’s just not enough bits to go around for that resolution, EVEN AT SLOW. I have created some samples at 720p using my “secret sauce” settings, which take some optimizations from Slow and Medium, but bypass some of the pointless performance-limiting flags from Medium and Slow. This can be applied to Fast, Medium, or Slow, and has netted me some of the best-looking Apex Legends streams on Twitch.
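A rough way to quantify "not enough bits to go around" is bits per pixel per frame. The Python sketch below assumes 60 frames per second and ignores audio and container overhead, so treat the results as ballpark figures rather than exact encoder budgets.

```python
def bits_per_pixel(bitrate_mbps, width, height, fps):
    """Average bits available per pixel per frame at a given video bitrate."""
    return bitrate_mbps * 1_000_000 / (width * height * fps)


if __name__ == "__main__":
    cases = [
        ("1080p60 @ 6mbps", 6, 1920, 1080),
        ("720p60 @ 6mbps", 6, 1280, 720),
        ("720p60 @ 8mbps", 8, 1280, 720),
    ]
    for label, mbps, w, h in cases:
        print(f"{label}: {bits_per_pixel(mbps, w, h, 60):.3f} bits per pixel")
```

1080p has 2.25 times as many pixels as 720p, so at the same bitrate every pixel in a 720p stream gets 2.25 times the bits.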

The options for OBS are formatted differently than FFMPEG.

Since I’m gaming at 1440p, I scale my capture card using Bicubic and run everything on OBS at 720p. This is an even integer scale of 1440p, whereas 1080p is not. Using non-integer scaling factors for video can cause further quality degradation, as you have more approximations being made about where details should be and jagged edges to compress, etc. This is also why I usually speak against using oddball resolutions like 900p or 960p, as they’re not an even scale at all.

Admittedly, I contradict myself in saying this, as I recommend a lot of people use 720p since they can get a cleaner feed, and 720p is not an even scale from 1080p either. The bitrate efficiency pays off here, IMO. Thankfully 4K scales to both 720p and 1080p evenly.
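The scale factors mentioned here are easy to check. This Python sketch divides source height by output height and reports whether the result is a whole number; the helper names are just for this example.

```python
from fractions import Fraction


def scale_factor(source_height, target_height):
    """Exact downscale ratio from source to target, as a fraction."""
    return Fraction(source_height, target_height)


def is_integer_scale(source_height, target_height):
    """True when every output pixel maps to a whole block of source pixels."""
    return scale_factor(source_height, target_height).denominator == 1


if __name__ == "__main__":
    pairs = [(1440, 720), (1440, 1080), (1440, 900), (1440, 960),
             (1080, 720), (2160, 720), (2160, 1080)]
    for src, dst in pairs:
        verdict = "even" if is_integer_scale(src, dst) else "uneven"
        print(f"{src}p -> {dst}p: factor {scale_factor(src, dst)} ({verdict})")
```

Of the pairs listed, only 1440p to 720p and 4K to 720p or 1080p come out even; 1080p to 720p is a factor of 3/2.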

720p through-and-through keeps scaling clean and image quality clear, but everything is a little soft. I think it’s worth the trade-off. What do you think?

In this example, I’m comparing bog-standard X264 Slow and Medium at 1080p vs my Secret Sauce config at 720p in Slow, Medium, and Fast. By eliminating some of the flags in X264 designed for post-production workflows, I’m able to squeeze out much more performance and even extend quality settings – such as extending rc_lookahead to a full second’s 60 frames instead of the 50 frames of Slow (Medium uses 40). Lookahead has major impacts on quality and I could theoretically extend it even further. While you can notice certain things, specifically text, look softer in my 720p footage than the 1080p footage, the overall image is significantly cleaner and more consumable and that is far more important to me. Plus since I push the rules a bit and stream at 8mbps, shown here, this reaches what YouTube produces for 720p videos, which is damn impressive. You do reach a point here, now, where more bitrate isn’t actually beneficial for 720p, which is a good spot to be at. Fine-tuning could probably be done to find a slightly lower bitrate for the same quality – though keep in mind that with X264, even with CBR, it doesn’t always push the bitrate you select, it’s usually lower. Bitrate efficiency of encoding settings is a whole new rabbit hole I won’t jump in for this video.

FFMPEG “Secret Sauce” config:

ffmpeg -y -r 60 -i lossless.mov -c:v libx264 -vf scale=1280:720 -preset slow -pix_fmt yuv420p -rc cbr -b:v 6M -maxrate 6M -bufsize 6M*2 -trellis 1 -aq-mode 1 -direct-pred spatial -partitions p8x8,b8x8,i8x8,i4x4 -threads 18 -rc-lookahead 60 -profile:v high -level 5.1 -bf 2 -g 120 -vsync 0 sauce720p6m_SLOW.mp4

This is optimized for my i9 dual PC setup (though it would work on a single PC with a similar core count CPU as well), but most of the options can be applied to slower chips on Fast/Medium by removing or modifying the thread allocation.

Note that FFMPEG’s commands work differently than OBS so they’re formatted differently.
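As a rough illustration of that difference: OBS’s custom x264 options field takes space-separated key=value pairs using x264’s own option names, while FFMPEG uses its own flags. The Python sketch below converts the encoder-tuning flags from the command above into that style. The flag-to-name mapping is an assumption made for this example (for instance -direct-pred to direct and -bf to bframes) and should be checked against x264’s help output. Preset, bitrate, profile, and keyframe interval are left out because OBS has dedicated settings for those.

```python
import shlex

# ffmpeg flag -> x264 option name. Assumed mapping for illustration only.
FFMPEG_TO_X264 = {
    "-trellis": "trellis",
    "-aq-mode": "aq-mode",
    "-direct-pred": "direct",
    "-partitions": "partitions",
    "-threads": "threads",
    "-rc-lookahead": "rc-lookahead",
    "-bf": "bframes",
}

# Excerpt of the tuning flags from the FFMPEG command in this post.
COMMAND = (
    "-trellis 1 -aq-mode 1 -direct-pred spatial "
    "-partitions p8x8,b8x8,i8x8,i4x4 -threads 18 -rc-lookahead 60 "
    "-profile:v high -level 5.1 -bf 2 -g 120"
)


def obs_x264_options(ffmpeg_args):
    """Turn known ffmpeg flag/value pairs into an OBS-style options string."""
    pairs = []
    for flag, value in zip(ffmpeg_args, ffmpeg_args[1:]):
        name = FFMPEG_TO_X264.get(flag)
        if name is not None:
            pairs.append(f"{name}={value}")
    return " ".join(pairs)


if __name__ == "__main__":
    print(obs_x264_options(shlex.split(COMMAND)))
```

Flags that are not in the mapping are silently skipped, so anything added to the command later has to be added to the table as well.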

Lastly, the problem of “more hardware is not more better” comes into play. I thought this was common knowledge until Linus’s video about choosing CPUs for their video render server a couple years back (they have a new one up on Floatplane by the way), and all the Threadripper high core count nonsense started to cause people to cry foul about encoder performance on these rigs – but video encoders and decoders can only scale so far when it comes to CPU thread counts. This can be accelerated exponentially with GPU, but when it comes to direct thread counts on the CPU, X264 specifically stops being viable after 20 threads. This means if it uses more than that, you risk reduced performance and even reduced quality. This is part of what I mean about not letting X264 Slow run rampant across a CPU with a ton of threads, which doesn’t help. It’s far smarter to use CPU Affinity allocation to assign your encoder to specific threads and game to different threads, or to just thread-limit X264 in OBS. I limit my X264 session to 18 threads on my i9-7980XE, and sometimes tinker with using more than the default 3 threads for lookahead for better quality. Arguably, the 18 threads I give it is too much; the FFMPEG command line even tells me that I shouldn’t be using more than 16!

So no, the newest 64-core 128 thread rumored Threadripper is NOT magically going to be an X264 Slow monster for 1080p streaming. It’s going to suck unless you’re carefully managing things – and a 10 core CPU with higher overclocks and faster memory would do better.
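A thread limit like this can be picked with a trivial rule: take the logical CPU count, optionally hold some threads back for the game, and never go above a cap. The Python sketch below uses 16 as the default cap, taken from the FFMPEG warning mentioned above; the function and the reserve_for_game parameter are hypothetical and not something OBS or X264 provides.

```python
import os


def x264_thread_count(logical_cpus=None, cap=16, reserve_for_game=0):
    """Suggest an encoder thread count: available threads, clamped to `cap`."""
    if logical_cpus is None:
        logical_cpus = os.cpu_count() or 1  # cpu_count() may return None
    available = max(1, logical_cpus - reserve_for_game)
    return min(cap, available)


if __name__ == "__main__":
    # An 18 core / 36 thread chip such as the i9-7980XE.
    print("threads =", x264_thread_count(36))
    # An 8 core / 16 thread chip on a single PC, holding 8 threads for the game.
    print("threads =", x264_thread_count(16, reserve_for_game=8))
```

The resulting number would go into the threads option of the encoder. Pinning the game and encoder to separate cores with CPU affinity is a separate step this sketch does not cover.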

With all of this, people tend to ask why Slow, Slower, Placebo, etc. even exist then – that’s because X264 is a video encoder that’s been around for a very long time and is used for many purposes outside just game streaming. It was never built for that. There are plenty of offline video mastering scenarios where Slower and individual flags are necessary to get the best result. Hell, there are some BluRay TV seasons encoded with X264!

Conclusion

(Timecode 29:34)

Why do I put myself through the process of making such insanely detailed videos? 5 hour video courses, documenting every detail of capture cards, and now this? I… don’t know.

The takeaway I really want to give from this video is that while yes, X264 Slow can provide some improved quality gains over Fast or Medium, that isn’t always true, and comes at a ridiculous performance cost that virtually no one should have to deal with. For most people NVENC or X264 Fast is the perfect sweet spot, and most high-end processors should be able to tackle that with minimal issue, depending on the game. Plus, there’s a lot more you can do to optimize your quality than just mess with Usage Presets.

I’ve been wanting to make this video for a long time, but it felt right to get it out following the GN video, and it was fun finally figuring out Netflix’s image analysis. I’ll probably use it for more fun projects in the future.

Here’s some other videos you might be interested in:

- Nvidia’s RTX NvEnc is beyond impressive… (GPU encoding explanation, x264 Medium Comparison) — https://www.youtube.com/watch?v=-fi9o2NyPaY

- NEW NVENC EXPLAINED, Stream Service Integration & MORE! – OBS Studio v23 Update Guide — https://www.youtube.com/watch?v=kW7Hd7kKGI4

- Old NVENC vs New NVENC – Quality Comparisons, Settings, What You Need to Know! (FULL GUIDE) — https://www.youtube.com/watch?v=6fyP7kg0QAc

Random Resources I’ve referenced (and kept track of) during this project that might be useful:

- https://web.archive.org/web/20120814041026/http://dev.gentoo.org/~beandog/x264_preset_reference.html

- https://web.archive.org/web/20190614232712/https://trac.ffmpeg.org/wiki/Encode/H.264

- https://stackoverflow.com/questions/11269338/how-to-comment-out-add-comment-in-a-batch-cmd

- https://wjwoodrow.wordpress.com/2013/11/22/adjusting-video-contrast-brightness-saturation-and-color-balance-with-ffmpeg/

- https://video.stackexchange.com/questions/20962/ffmpeg-color-correction-gamma-brightness-and-saturation

- http://acceptv.com/en/products_vqa.php

- http://www.solveigmm.com/en/products/zond/

- https://superuser.com/questions/1079403/how-to-run-multiple-commands-one-after-another-in-cmd/1079407

- https://stackoverflow.com/questions/19840960/comparing-psnr-of-two-videos-possibly-with-ffmpeg

- https://trac.ffmpeg.org/wiki/EncodingForStreamingSites

- https://matplotlib.org/gallery/index.html

- https://obsproject.com/forum/threads/comparison-of-x264-nvenc-quicksync-vce.57358/page-4#post-396132

- https://github.com/stoyanovgeorge/ffmpeg/wiki/How-to-Compare-Video